We introduce Zero-1-to-3, a framework for changing the camera viewpoint of an object given just a single RGB image. To perform novel view synthesis in this under-constrained setting, we capitalize on the geometric priors that large-scale diffusion models learn about natural images. Our conditional diffusion model uses a synthetic dataset to learn controls of the relative camera viewpoint, which allow new images to be generated of the same object under a specified camera transformation. Even though it is trained on a synthetic dataset, our model retains a strong zero-shot generalization ability to out-of-distribution datasets as well as in-the-wild images, including impressionist paintings. Our viewpoint-conditioned diffusion approach can further be used for the task of 3D reconstruction from a single image. Qualitative and quantitative experiments show that our method significantly outperforms state-of-the-art single-view 3D reconstruction and novel view synthesis models by leveraging Internet-scale pre-training.

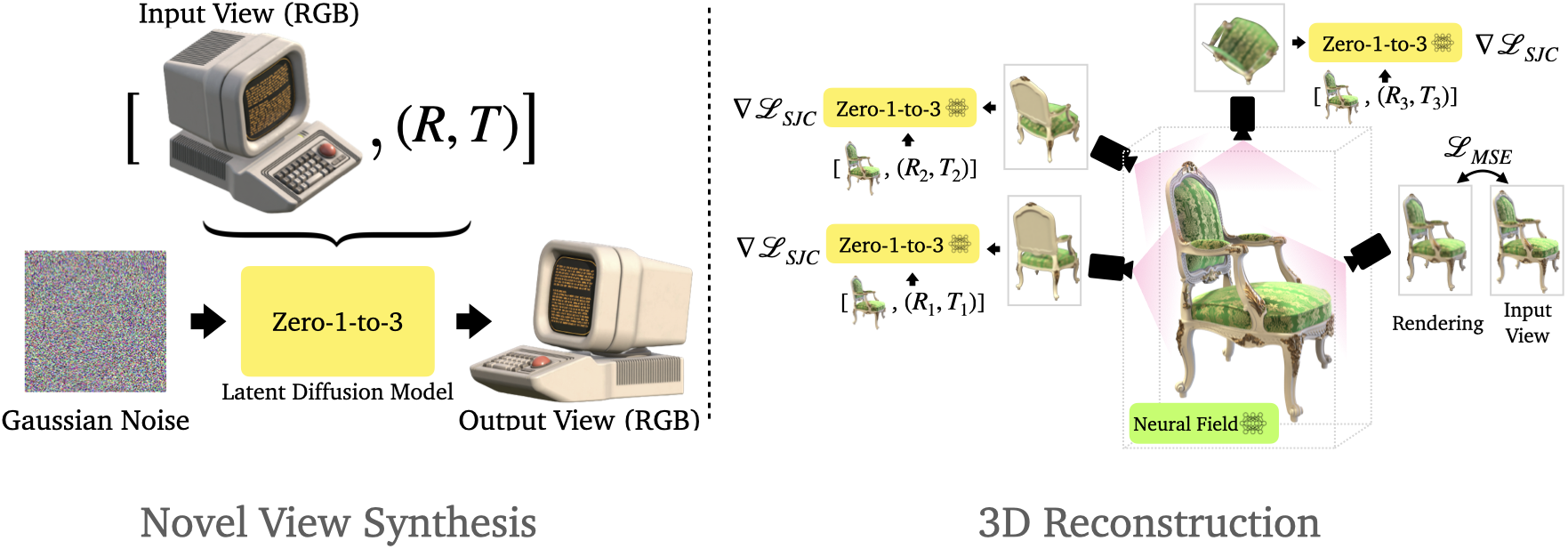

We learn a view-conditioned diffusion model that can subsequently control the viewpoint of an image containing a novel object (left). Such a diffusion model can also be used to train a NeRF for 3D reconstruction (right). Please refer to our paper for more details, or check out our code for the implementation.
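As a rough illustration of what conditioning on a "relative camera viewpoint" could look like, the sketch below computes the change between a source and a target camera pose. It assumes cameras are placed on a sphere around the object and described by a polar angle, an azimuth angle, and a radius; that parameterization, along with the names `SphericalPose`, `relative_viewpoint`, and `encode_condition`, is hypothetical and not taken from this page. See the paper and code for the actual formulation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SphericalPose:
    """Hypothetical camera pose on a sphere centered on the object."""
    polar_deg: float    # angle measured from the vertical axis
    azimuth_deg: float  # angle around the vertical axis
    radius: float       # distance from the object center


def relative_viewpoint(source: SphericalPose, target: SphericalPose):
    """Return (d_polar, d_azimuth, d_radius) from source to target.

    The azimuth difference is wrapped into [-180, 180) so that a move
    from 10 to 350 degrees is reported as -20 rather than +340.
    """
    d_polar = target.polar_deg - source.polar_deg
    d_azimuth = (target.azimuth_deg - source.azimuth_deg + 180.0) % 360.0 - 180.0
    d_radius = target.radius - source.radius
    return (d_polar, d_azimuth, d_radius)


def encode_condition(delta):
    """Turn the relative viewpoint into a small conditioning vector.

    Assumption: the azimuth is encoded as (sin, cos) to avoid a
    discontinuity at the wrap-around point. This is one common choice,
    not something stated on this page.
    """
    d_polar, d_azimuth, d_radius = delta
    azimuth_rad = math.radians(d_azimuth)
    return [math.radians(d_polar), math.sin(azimuth_rad), math.cos(azimuth_rad), d_radius]


source = SphericalPose(polar_deg=60.0, azimuth_deg=10.0, radius=1.5)
target = SphericalPose(polar_deg=45.0, azimuth_deg=350.0, radius=2.0)
delta = relative_viewpoint(source, target)
condition = encode_condition(delta)
```

In a setup like this, `condition` would be passed to the diffusion model alongside the input image, so that the same image can be rendered under different requested camera transformations.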

Here are some uncurated inference results from in-the-wild images we tried, along with images from the Google Scanned Objects and RTMV datasets. Note that the demo offers only a limited selection of rotation angles, quantized in 30-degree steps, because of limited storage space on the hosting server. If you want to try a fully custom demo running on a GPU server that lets you upload your own image, please check the live demo above or run one locally with our code!
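To make the 30-degree quantization concrete, here is a minimal sketch of how a requested rotation angle could be snapped to the nearest precomputed angle. The function name `snap_angle` and the rounding rule (ties round up) are assumptions for illustration; the page does not say how the demo selects its angles.

```python
import math


def snap_angle(angle_deg: float, step_deg: float = 30.0) -> float:
    """Snap an angle to the nearest multiple of step_deg in [0, 360).

    Hypothetical helper: ties are rounded up, and 360 wraps back to 0.
    """
    wrapped = angle_deg % 360.0
    snapped = math.floor(wrapped / step_deg + 0.5) * step_deg
    return snapped % 360.0


# The twelve angles available at a 30-degree step.
available_angles = [i * 30.0 for i in range(12)]

requested = [47.0, 350.0, -20.0, 14.0]
snapped = [snap_angle(a) for a in requested]
```

With a 30-degree step, only twelve views per axis need to be stored, which is consistent with the storage constraint mentioned above.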

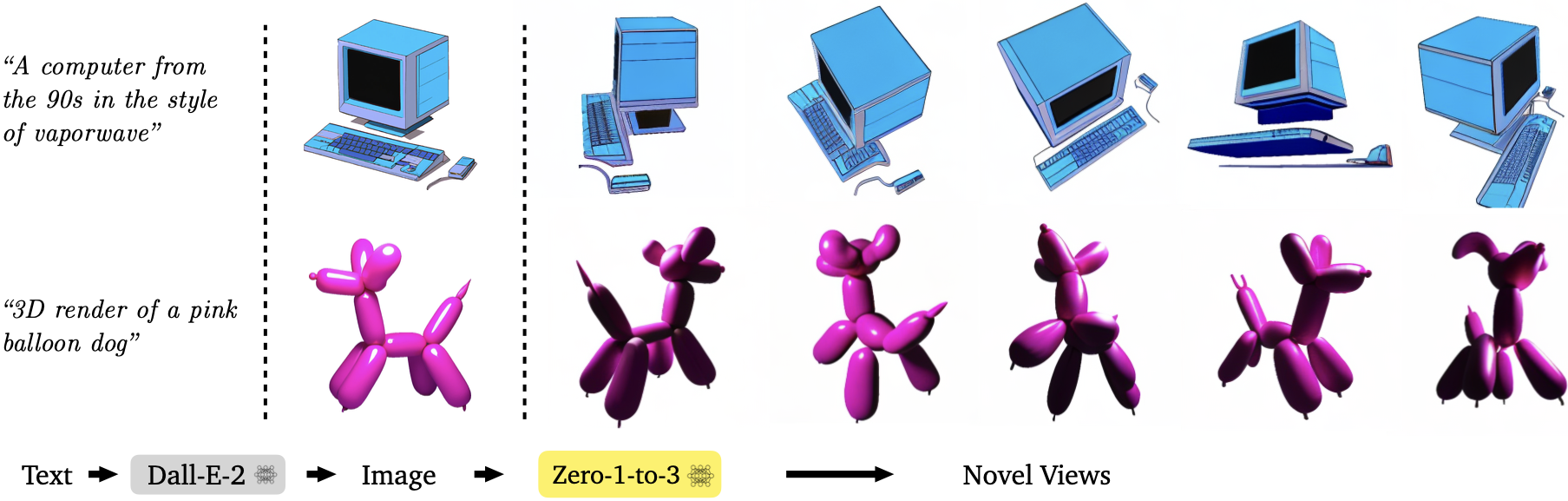

Here are results of applying Zero-1-to-3 to images generated by DALL-E 2.

Here are results of applying Zero-1-to-3 to obtain a full 3D reconstruction from the input image shown on the left. We compare our reconstruction with state-of-the-art models in single-view 3D reconstruction.

Score Jacobian Chaining: Lifting Pretrained 2D Diffusion Models for 3D Generation

DreamFusion: Text-to-3D using 2D Diffusion

SparseFusion: Distilling View-conditioned Diffusion for 3D Reconstruction

NeuralLift-360: Lifting An In-the-wild 2D Photo to A 3D Object with 360° Views

NeRDi: Single-View NeRF Synthesis with Language-Guided Diffusion as General Image Priors

RealFusion: 360° Reconstruction of Any Object from a Single Image

@misc{liu2023zero1to3,
  title={Zero-1-to-3: Zero-shot One Image to 3D Object},
  author={Ruoshi Liu and Rundi Wu and Basile Van Hoorick and Pavel Tokmakov and Sergey Zakharov and Carl Vondrick},
  year={2023},
  eprint={2303.11328},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}